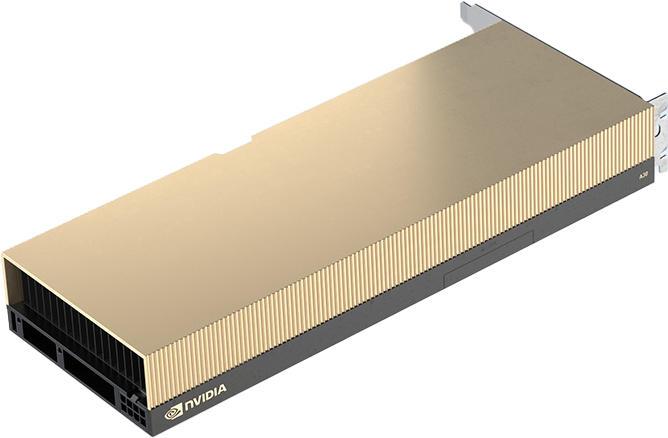

NVIDIA A30 GPU

NeevCloud brings you the cutting-edge NVIDIA A30 Tensor Core GPU, a versatile accelerator engineered for mainstream enterprise workloads. Built on the NVIDIA Ampere architecture and equipped with 24GB of HBM2 GPU memory, the A30 delivers superior performance for AI inference, high-performance computing (HPC), and data analytics in a cloud environment.

Start Your AI Journey Today!