Want to get your hands on the most powerful supercomputer for AI and Machine Learning? You've come to the right place.

Mixture of Experts (395 Billion Parameters)

Projected performance subject to change. Training Mixture of Experts (MoE) Transformer Switch-XXL variant with 395B parameters on a 1T-token dataset | A100 cluster: HDR IB network | H100 cluster: NVLink Switch System, NDR IB.

Megatron Chatbot Inference (530 Billion Parameters)

Projected performance subject to change. Chatbot inference on the Megatron 530B-parameter model with input sequence length = 128 and output sequence length = 20 | A100 cluster: HDR IB network | H100 cluster: NVLink Switch System, NDR IB.

Projected performance subject to change. 3D FFT (4K^3) throughput | A100 cluster: HDR IB network | H100 cluster: NVLink Switch System, NDR IB.

Genome Sequencing (Smith-Waterman) | 1 A100 | 1 H100.

Our infrastructure is purpose-built to solve the toughest AI/ML and HPC challenges. You gain the performance and cost savings needed to train large-scale models efficiently, accelerating your deep learning and machine learning workloads.

Use NVIDIA H100 GPUs to fine-tune pre-trained models to suit your needs. Accelerate the fine-tuning process for faster iterations and maximum resource utilization.
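A quick way to size a full fine-tuning job on 80GB H100s is to count the training state kept per parameter. The sketch below assumes mixed-precision Adam at roughly 16 bytes per parameter (2 for weights, 2 for gradients, 12 for FP32 master weights and the two Adam moments), sharded evenly across GPUs. It ignores activations and framework overhead, so it gives only a lower bound. The function name and constants are illustrative and are not part of any NeevCloud tooling.

```python
import math

# Assumption: mixed-precision Adam, full fine-tuning.
# 2 bytes FP16/BF16 weights + 2 bytes gradients
# + 12 bytes FP32 state (master weights, first moment, second moment).
BYTES_PER_PARAM_FULL_FT = 2 + 2 + 12

# Assumption: 80 GB per H100 SXM5, treated as 80e9 bytes.
# Usable capacity is somewhat lower in practice.
GPU_MEMORY_BYTES = 80e9


def min_gpus_for_full_finetune(num_params: float) -> int:
    """Lower bound on the number of GPUs needed just to hold weights,
    gradients and optimizer state, assuming that state is sharded evenly
    (FSDP / ZeRO-3 style). Activations are ignored."""
    total_bytes = num_params * BYTES_PER_PARAM_FULL_FT
    return math.ceil(total_bytes / GPU_MEMORY_BYTES)


if __name__ == "__main__":
    for params in (7e9, 70e9):
        print(f"{params / 1e9:.0f}B params -> at least "
              f"{min_gpus_for_full_finetune(params)} x 80GB GPUs")
```

Under these assumptions, a 7B-parameter model needs at least 2 GPUs and a 70B-parameter model at least 14, before any memory is set aside for activations.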

Introducing a cutting-edge solution engineered to elevate your inference experience. Our platform delivers exceptional throughput for rapid data processing, giving you seamless, high-performance inference.

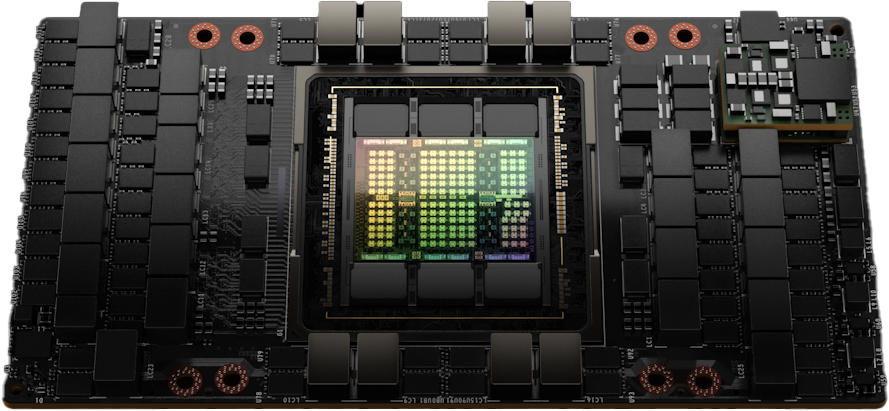

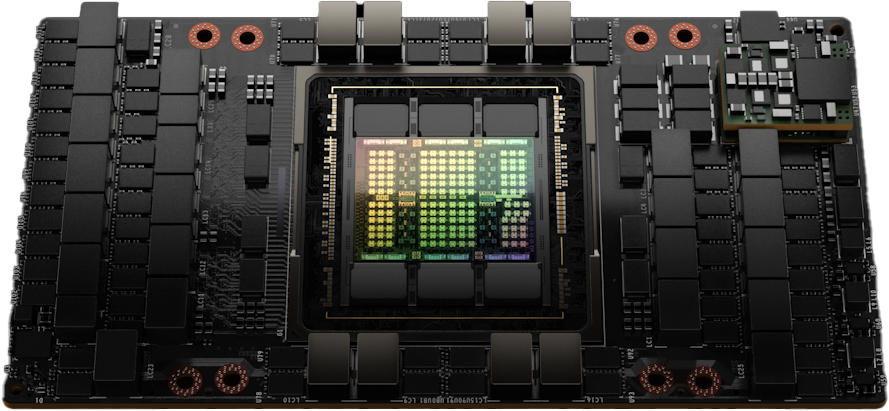

NVIDIA HGX H100 80GB SXM5 Accelerators

Gbps of GPUDirect InfiniBand Networking

GPU Memory

DDR4 RAM

vCPUs

NVMe Storage

Our superclusters are meticulously designed for peak performance, using InfiniBand NDR networking with a rail-optimized topology. With support for NVIDIA SHARP in-network collectives, our clusters keep communication fast and latency low, minimizing network bottlenecks in your training workflows. Trust NeevCloud to optimize your training experiments, delivering maximum compute per dollar and enabling cost-effective AI innovation.
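To see why in-network collectives help, it can be useful to compare how much data each GPU sends during a gradient all-reduce. The sketch below is an idealized model, not a benchmark. In a ring all-reduce (reduce-scatter followed by all-gather), each of N ranks transmits 2(N−1)/N times the message size. With SHARP-style in-network reduction, each rank sends its buffer once to the switch tree and receives the reduced result once. Latency, link speeds, and switch-tree limits are ignored, and the function names are hypothetical.

```python
def ring_allreduce_bytes_sent_per_rank(message_bytes: float, num_ranks: int) -> float:
    """Bytes each rank transmits in a ring all-reduce
    (reduce-scatter + all-gather): 2 * (N - 1) / N * message size."""
    if num_ranks < 1:
        raise ValueError("num_ranks must be >= 1")
    return 2 * (num_ranks - 1) / num_ranks * message_bytes


def in_network_allreduce_bytes_sent_per_rank(message_bytes: float, num_ranks: int) -> float:
    """Idealized in-network (SHARP-style) reduction: each rank sends its
    buffer once to the switches, which aggregate it; nothing is sent when
    there is only one rank."""
    if num_ranks < 1:
        raise ValueError("num_ranks must be >= 1")
    return message_bytes if num_ranks > 1 else 0.0


if __name__ == "__main__":
    gib = 1.0  # message size in GiB (e.g. one step's gradients)
    for n in (2, 8, 64):
        ring = ring_allreduce_bytes_sent_per_rank(gib, n)
        sharp = in_network_allreduce_bytes_sent_per_rank(gib, n)
        print(f"N={n:>3}: ring sends {ring:.3f} GiB/rank, "
              f"in-network sends {sharp:.3f} GiB/rank")
```

For 1 GiB of gradients across 64 ranks, the ring sends about 1.97 GiB per rank versus 1 GiB for in-network reduction. As N grows, that approaches a 2x reduction in per-GPU traffic in this simplified model.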

Experience unparalleled storage flexibility with NeevCloud: zero ingress/egress fees, plus NVMe, SSD, and Object Storage options tailored to your workloads. Our NVMe Shared File System tier delivers lightning-fast performance with high IOPS per volume, while NVMe-accelerated Object Storage integrates seamlessly with compute instances. Choose NeevCloud for hassle-free, adaptive storage solutions.

Stop struggling with on-prem deployments and confusing cloud options. NeevCloud offers all-in-one solutions for optimized distributed training at scale. Benefit from leading tools like Determined.AI and SLURM, pre-configured and ready to go. Plus, access our team of ML engineers for expert assistance at no extra cost. Choose NeevCloud for seamless, hassle-free and fast deployment.
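On a Slurm cluster, a distributed training script typically derives its rank from environment variables that Slurm sets for each task launched with `srun`. The sketch below assumes one task per GPU and reads `SLURM_PROCID` (global task rank), `SLURM_NTASKS` (total tasks), and `SLURM_LOCALID` (task rank within its node). It falls back to a single-process setup when those variables are absent. `DistInfo` and `dist_info_from_slurm` are hypothetical helpers and do not describe NeevCloud's pre-configured environment.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class DistInfo:
    rank: int        # global rank across all nodes
    world_size: int  # total number of processes in the job
    local_rank: int  # rank within this node, commonly used to pick the GPU


def dist_info_from_slurm(env: Mapping[str, str] = os.environ) -> DistInfo:
    """Map Slurm's per-task environment variables to distributed-training
    ranks. Assumes one task per GPU launched via srun; defaults describe a
    single process when the variables are missing."""
    return DistInfo(
        rank=int(env.get("SLURM_PROCID", "0")),
        world_size=int(env.get("SLURM_NTASKS", "1")),
        local_rank=int(env.get("SLURM_LOCALID", "0")),
    )


if __name__ == "__main__":
    info = dist_info_from_slurm()
    print(f"rank {info.rank}/{info.world_size}, local GPU {info.local_rank}")
```

Passing the environment as a mapping keeps the helper easy to test without launching a real job. The resulting values can then be handed to whatever framework initializes the process group.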
